7. PyTorch

PyTorch is a framework for differentiation and optimization, in particular the optimization of neural networks (which is why it is often simply called a deep learning framework), written in the native imperative style of Python.

7.1. Some optional information about PyTorch and how it differs from its competitors

Several deep learning frameworks, such as Theano and TensorFlow (in its original graph mode), work with symbolic differentiation: you must declare the model structure before supplying any data and then ask the framework to compile the model, at which point the gradients are derived symbolically. After that, the model is ready to use as long as its structure does not change.

While this approach has advantages, such as possible algebraic simplifications when working out the derivatives, it also comes at a price: the model must be "recompiled" whenever it changes, the code is less intuitive to write and harder to debug, and the approach is potentially more restrictive on model characteristics.

On the other hand, PyTorch, the framework of choice for this section, works with reverse-mode automatic differentiation, which records the chain of operations (on a "tape") on the fly as they are executed. That is, after the last operation is done (in our case, the calculation of the loss), the recorded operations are traversed backwards (i.e. the "tape" is run in reverse) and the gradients of the parameters of interest are calculated using the chain rule.
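To make the "tape" idea concrete, here is a minimal pure-Python sketch of reverse-mode differentiation for scalars. The `Var` class and the `sin` and `backward` helpers are hypothetical names invented for this illustration; PyTorch's real machinery is far more general, so treat this only as a toy model of the principle.

```python
import math

class Var:
    """A scalar that remembers how it was computed (a toy 'tape' entry)."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

def sin(v):
    return Var(math.sin(v.value), ((v, math.cos(v.value)),))

def backward(output):
    # Order the recorded operations so that each node comes after its inputs
    order, seen = [], set()
    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)
    visit(output)

    # Run the "tape" backwards, applying the chain rule at each step
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local

x = Var(0.5)
y = Var(2.0)
z = x * y + sin(x)
backward(z)
```

After the call, `x.grad` holds y + cos(x) and `y.grad` holds x, which is what the chain rule gives by hand.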

Frameworks that use symbolic differentiation are often called static, while the ones that use automatic differentiation are called dynamic. Regardless of this, most (if not all) of these deep learning frameworks have two common characteristics worth emphasizing: their differentiation facilities can be used for problems other than deep learning, neural networks or optimization (e.g. Markov chain Monte Carlo and Bayesian inference), and they natively support GPU acceleration (generally using Nvidia CUDA).

GPU acceleration is a common denominator among deep learning frameworks because neural networks are highly parallelizable problems, which makes them well suited to GPUs. This explains, at least in part, their recent surge in popularity: such methods scale well to big datasets, which are themselves becoming increasingly common and important.

7.2. Now getting started

First of all, let’s start by importing some basic stuff

[1]:

import numpy as np

import scipy.stats as stats

import pandas as pd

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import torch.optim as optim

from torch.nn import functional as F

from torch.utils.data import random_split, TensorDataset, DataLoader

import pickle

%matplotlib inline

7.3. Tensors and GPU

Now, let me present the basic storage type of PyTorch: the tensor. Tensors work in a very similar fashion to NumPy arrays.

[2]:

x = torch.tensor([.45, .53])

y = x**2

y[0] = .3

a = torch.ones((2,4))

a = a * 2

a = a + 1

a[0,0] = .4

b = torch.zeros((4,2))

b = (b + 3) / 2

b[0,1] = .2

bt = b.transpose(0,1) # transpose

a + bt

torch.mm(a,b) # matrix multiplication

[2]:

tensor([[14.1000, 13.5800],

[18.0000, 14.1000]])

But PyTorch tensors have a special feature: they can live on the GPU (if you have one properly installed; the code below checks torch.cuda.is_available() first, so it simply stays on the CPU if you don’t)!

[3]:

a = torch.rand((3, 5)) # some random numbers
if torch.cuda.is_available():
    a = a.cuda()

b = torch.ones((5, 3))
if torch.cuda.is_available():
    b = b.cuda()

b = (b + 3) / 2
b[0,1] = .2

torch.mm(a, b) # matrix multiplication

[3]:

tensor([[4.5492, 3.3939, 4.5492],

[4.3107, 2.7360, 4.3107],

[6.2844, 6.2774, 6.2844]], device='cuda:0')
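A common alternative to repeating the availability check is to choose a device once and write the rest of the code against it. The snippet below is a small sketch of that pattern; the variable names `device`, `product` and `product_np` are just illustrative choices.

```python
import torch

# Pick the device once; everything else is written against `device`
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

a = torch.rand((3, 5), device=device)   # created directly on the device
b = torch.ones((5, 3)).to(device)       # or moved there after creation
product = torch.mm(a, b)

# Bring the result back to the CPU before handing it to NumPy
product_np = product.cpu().numpy()
```

The same code then runs unchanged on machines with and without a GPU.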

7.4. Float data type

Note that the default floating-point data type in PyTorch is 32-bit:

[4]:

torch.rand((3, 5)).dtype

[4]:

torch.float32

While in NumPy the default precision is 64-bit (shown here for integers; NumPy floats likewise default to float64):

[5]:

np.arange(4).dtype

[5]:

dtype('int64')

You can request a different data type by passing the dtype parameter or by using the special constructors:

[6]:

print(torch.rand((3, 5), dtype=torch.float64).dtype)

print(torch.FloatTensor([.5]).dtype)

print(torch.HalfTensor([.5]).dtype)

print(torch.DoubleTensor([.5]).dtype)

torch.float64

torch.float32

torch.float16

torch.float64

But it’s recommended to use the default float32 for most deep learning applications, because of the speed-up it can give on vectorized operations, especially on GPUs.
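To get a feel for what is traded away with float32, the sketch below rounds a 64-bit Python float to 32 bits using only the standard library. The helper `to_float32` is a hypothetical name introduced for this illustration.

```python
import struct

def to_float32(value):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack('f', struct.pack('f', value))[0]

x64 = 0.1
x32 = to_float32(0.1)
rounding_error = abs(x32 - x64)

# Storage per number: 4 bytes versus 8 bytes
size32 = struct.calcsize('f')
size64 = struct.calcsize('d')
```

The rounding error is on the order of 1e-9 for a value like 0.1, which is usually negligible next to the noise in the data, while memory use per number is halved.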

7.5. Differentiation

We can use PyTorch to do automatic differentiation using the code below:

[7]:

x = torch.tensor([.45], requires_grad=True)

y = x**2

y.backward()

x.grad

[7]:

tensor([0.9000])

x.grad gives us the gradient of y with respect to x. Now pay attention to (and play with) this other example:

[8]:

x = torch.tensor([.5,.3,.6], requires_grad=True)

y = x**2

z = y.sum()

z.backward()

x.grad

[8]:

tensor([1.0000, 0.6000, 1.2000])
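One way to convince yourself that these gradients are right is to compare them with central finite differences. The check below is only a sketch: the function `f`, the step size `h` and the use of float64 (to keep the finite-difference error small) are choices made for this illustration.

```python
import torch

def f(t):
    return (t ** 2).sum()

x = torch.tensor([.5, .3, .6], dtype=torch.float64, requires_grad=True)
f(x).backward()
autograd_grad = x.grad.clone()

# Central finite differences, one coordinate at a time
h = 1e-6
numeric_grad = torch.zeros_like(autograd_grad)
with torch.no_grad():
    for i in range(len(x)):
        step = torch.zeros_like(x)
        step[i] = h
        numeric_grad[i] = (f(x + step) - f(x - step)) / (2 * h)
```

Both approaches should agree up to a small numerical error, but automatic differentiation needs a single backward pass while finite differences need two function evaluations per coordinate.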

7.6. Optimization

Now let’s try using this differentiation framework for optimization:

[9]:

x = torch.tensor([.45], requires_grad=True)

# "declares" that x is the variable being optimized by the Adam optimization algorithm
optimizer = optim.Adam([x])

y = 2 * x**2 - 7 * x
y.backward() # computes the gradient of y (the value being minimized) with respect to x
optimizer.step() # takes one Adam step, moving x towards lower values of y
x

[9]:

tensor([0.4510], requires_grad=True)

Here optimizer.step() moved x against the direction of its gradient, i.e. it moved x in the direction that decreases y.
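The size of that move (from 0.45 to about 0.451) can be reproduced by hand. The sketch below works through the first Adam update in plain Python, assuming the default hyperparameters documented for optim.Adam (lr=1e-3, betas=(0.9, 0.999), eps=1e-8) and zero initial moment estimates.

```python
import math

lr, beta1, beta2, eps = 1e-3, 0.9, 0.999, 1e-8   # assumed Adam defaults

x = 0.45
grad = 4 * x - 7            # derivative of 2*x**2 - 7*x at x = 0.45

# First step (t = 1), starting from zero moment estimates
m = (1 - beta1) * grad
v = (1 - beta2) * grad ** 2
m_hat = m / (1 - beta1 ** 1)   # bias correction
v_hat = v / (1 - beta2 ** 1)
x_new = x - lr * m_hat / (math.sqrt(v_hat) + eps)
```

On the first step the bias-corrected ratio is essentially the sign of the gradient, so x moves by roughly lr regardless of how large the gradient is.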

(Note that requires_grad=True is necessary for PyTorch to know that it must keep track of the operations done with x, so that it can later backpropagate through them to get the gradient with respect to x. You have to ask for this explicitly because otherwise PyTorch saves computational resources by not creating these structures.)

However, this is just one small step towards the optimum; we must repeat it many times to get there:

[10]:

x = torch.tensor([-2.45], requires_grad=True)
optimizer = optim.Adam([x], lr=0.05)

for _ in range(1000):
    optimizer.zero_grad()
    y = 2 * x**2 - 7 * x
    y.backward()
    optimizer.step()

print("Numerical optimization solution:", x)
print("Analytic optimization solution:", 7/4)

Numerical optimization solution: tensor([1.7500], requires_grad=True)

Analytic optimization solution: 1.75
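The call to optimizer.zero_grad() at the start of each iteration matters because backward() adds to whatever is already stored in x.grad. The following sketch shows that accumulation directly; it resets the gradient with x.grad.zero_(), which plays the role of optimizer.zero_grad() in this small example.

```python
import torch

x = torch.tensor([.45], requires_grad=True)

(2 * x**2 - 7 * x).backward()
first = x.grad.clone()        # 4 * 0.45 - 7 = -5.2

(2 * x**2 - 7 * x).backward() # no reset in between
accumulated = x.grad.clone()  # the two gradients were summed

x.grad.zero_()                # reset, in the spirit of optimizer.zero_grad()
(2 * x**2 - 7 * x).backward()
fresh = x.grad.clone()
```

Without the reset, every step would use the sum of all past gradients instead of the current one.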

Great, we did it! Now let’s try something more difficult: given i.i.d. Gaussian samples, let’s find the \(\hat{\mu}\) that minimizes the sum of the squared errors between those samples and \(\hat{\mu}\). We know from statistical theory that the analytical solution is the sample average.
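For reference, the sample-average claim follows from one line of calculus. Writing \(x_1, \dots, x_n\) for the samples (a notation introduced only for this sketch) and setting the derivative of the sum of squares to zero gives

\[
\frac{d}{d\hat{\mu}} \sum_{i=1}^{n} (x_i - \hat{\mu})^2
= -2 \sum_{i=1}^{n} (x_i - \hat{\mu}) = 0
\quad\Longrightarrow\quad
\hat{\mu} = \frac{1}{n} \sum_{i=1}^{n} x_i .
\]

The second derivative is \(2n > 0\), so this stationary point is a minimum. Dividing the sum by \(n\), as a mean squared error does, rescales the objective but leaves the minimizer unchanged.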

[11]:

mu_hat = torch.tensor([0.1], requires_grad=True)

mu_true = 1.5
x = stats.norm.rvs(size=2000, loc=mu_true, scale=3, random_state=0)
x = torch.as_tensor(x, dtype=torch.float32)

optimizer = optim.Adam([mu_hat], lr=0.05)
criterion = nn.MSELoss()

for _ in range(1000):
    optimizer.zero_grad()
    # expand mu_hat to the shape of x so that MSELoss does not have to broadcast
    loss = criterion(mu_hat.expand_as(x), x)
    loss.backward()
    optimizer.step()

print("Numerical optimization solution:", mu_hat)
print("Analytic optimization solution:", x.mean())

Numerical optimization solution: tensor([1.4525], requires_grad=True)

Analytic optimization solution: tensor(1.4525)

And voila! It worked again!

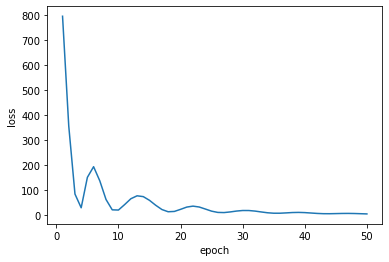

7.7. Neural networks with PyTorch

Now for what is probably the most anticipated part: your first neural network with PyTorch:

[12]:

# Declares the structure of our neural network
class Net(nn.Module):
    def __init__(self):
        # this is strictly necessary!
        super(Net, self).__init__()

        # fully connected layer with input of size 90 and output of size 5000
        self.fc1 = nn.Linear(90, 5000)

        # fully connected layer with input of size 5000 and output of size 1000
        self.fc2 = nn.Linear(5000, 1000)

        # fully connected layer with input of size 1000 and output of size 1
        self.fc3 = nn.Linear(1000, 1)

        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.relu(self.fc1(x))
        x = self.relu(self.fc2(x))
        x = self.fc3(x)

        return x

net1 = Net() # Construct the neural network object

# Creates some data using a linear regression of cosines
torch.manual_seed(1)
beta = torch.rand(90, 1)
train_inputv = torch.randn(700, 90)
train_target = torch.mm(torch.cos(train_inputv), beta)
train_target = train_target + torch.randn(700, 1)

# If a GPU is available, move the network parameters and data into it
if torch.cuda.is_available():
    net1.cuda()
    train_inputv = train_inputv.cuda()
    train_target = train_target.cuda()

criterion = nn.MSELoss()
optimizer = optim.Adam(net1.parameters(), lr=0.001)

print("Starting optimization.")
losses = []
net1.train()
for epoch in range(50):
    optimizer.zero_grad()
    output = net1(train_inputv)
    loss = criterion(output, train_target)
    print('\r'+' '*1000, end='', flush=True)
    print('\rLoss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='', flush=True)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print("\nOptimization finished.")

plt.plot(range(1, len(losses)+1), losses)
plt.xlabel('epoch')
plt.ylabel('loss')
None

Starting optimization.

Loss 5.02 in epoch 50

Optimization finished.
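It helps to keep the size of this network in mind when reading the results that follow. The plain-Python count below uses only the layer sizes from the class above; the variable names are illustrative.

```python
# (inputs, outputs) of each fully connected layer of the network above
layer_shapes = [(90, 5000), (5000, 1000), (1000, 1)]

# A linear layer has inputs * outputs weights plus one bias per output
params_per_layer = [n_in * n_out + n_out for n_in, n_out in layer_shapes]
total_params = sum(params_per_layer)

n_train = 700
params_per_sample = total_params / n_train
```

That is roughly 5.5 million parameters for 700 training samples, so the network has ample capacity to memorize the training set. With the network at hand, the same total should be obtainable as sum(p.numel() for p in net1.parameters()).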

7.8. Evaluation on test set

Now let’s create more data from the same linear regression and see how well our network is able to predict it:

[13]:

# Moves the network back to the CPU if it was on a GPU.
net1.cpu()

# Since we are not training the network anymore, let's put it in
# evaluation mode, which makes layers such as dropout and batch
# normalization behave deterministically. In case you need to train
# it again, call net1.train()
net1.eval()

# Creates some data using the same linear regression of cosines
torch.manual_seed(2)
test_inputv = torch.randn(200, 90)
test_target = torch.mm(torch.cos(test_inputv), beta)
test_target = test_target + torch.randn(200, 1)

with torch.no_grad():
    predicted_values = net1(test_inputv)
    loss_on_test = criterion(predicted_values, test_target).item()

print(f"loss on test dataset: {loss_on_test}")

loss on test dataset: 23.99188995361328

Exercise: try decreasing and increasing the amount of training data and see if this error goes down! Also try to change the number of features to see how it affects the error.

7.9. TPUs

In addition to GPUs, it’s possible to train PyTorch models using Google TPUs (which are available for free on Google Colab). The code below automatically detects whether a TPU is available in the current environment and, if so, installs the required dependencies.

For more information about using PyTorch with TPUs, visit: http://pytorch.org/xla/release/1.8/index.html

[14]:

import os
import subprocess

if os.environ.get('COLAB_TPU_ADDR') is not None:
    # os.environ['XLA_USE_BF16'] = '1'
    pip_cmd = 'pip install cloud-tpu-client==0.10 '
    pip_cmd += 'https://storage.googleapis.com/tpu-pytorch/wheels/torch_xla-1.8.1-cp37-cp37m-linux_x86_64.whl'
    subprocess.run(pip_cmd, shell=True, capture_output=True, check=True)

    import torch_xla
    import torch_xla.core.xla_model as xm

7.10. Batch training

There seems to be some overfitting here. Let’s try to work that out by applying batch training:

[15]:

if os.environ.get('COLAB_TPU_ADDR') is not None:
    device = xm.xla_device()
elif torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

net1.to(device)
train_inputv = train_inputv.cpu()
train_target = train_target.cpu()

criterion = nn.MSELoss()
optimizer = optim.Adam(net1.parameters(), lr=0.001)

print("Starting optimization.")
losses = []
net1.train()
for epoch in range(300):
    pytorch_dataset = TensorDataset(train_inputv, train_target)
    dataset_loader = DataLoader(
        pytorch_dataset, batch_size=100, shuffle=True,
        pin_memory=device.type=='cuda', drop_last=True
    )
    batch_losses = []
    for batch_inputv, batch_target in dataset_loader:
        batch_inputv = batch_inputv.to(device)
        batch_target = batch_target.to(device)

        optimizer.zero_grad()
        output = net1(batch_inputv)
        batch_loss = criterion(output, batch_target)
        batch_loss.backward()
        optimizer.step()
        batch_losses.append(batch_loss.item())

    loss = np.mean(batch_losses)
    losses.append(loss)
    if not (epoch+1) % 25:
        print('\r'+' '*1000, end='', flush=True)
        print('\rLoss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='', flush=True)

print("\nOptimization finished.")

# Evaluation on test set
net1.cpu()
net1.eval()
with torch.no_grad():
    predicted_values = net1(test_inputv)
    loss_on_test = criterion(predicted_values, test_target).item()

print(f"Loss on test dataset: {loss_on_test}")

Starting optimization.

Loss 0.05 in epoch 300

Optimization finished.

Loss on test dataset: 26.671913146972656
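What the data loader does with shuffle=True and drop_last=True can be sketched in a few lines of plain Python. The generator `minibatch_indices` below is a hypothetical helper written for this illustration, not part of PyTorch; it only mimics how sample indices are grouped into batches.

```python
import random

def minibatch_indices(n_samples, batch_size, shuffle=True, drop_last=True, seed=None):
    """Yield lists of sample indices, one list per mini-batch."""
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, n_samples, batch_size):
        batch = indices[start:start + batch_size]
        if drop_last and len(batch) < batch_size:
            break   # skip the incomplete final batch
        yield batch

# 700 samples in batches of 100, as in the training loop above
batches = list(minibatch_indices(700, 100, seed=0))

# A size that is not a multiple of 100: the last 30 samples are dropped or kept
batches_630 = list(minibatch_indices(630, 100, seed=0))
batches_630_keep = list(minibatch_indices(630, 100, drop_last=False, seed=0))
```

With 700 samples and batches of 100, each epoch therefore performs 7 optimizer steps instead of the single step per epoch of the earlier full-batch loop.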

7.11. Dropout, batch normalization and ELU activation

The training loss improved, but the test loss did not, so there still seems to be a lot of overfitting. Let’s work that out by applying dropout, batch normalization and ELU activation:

[16]:

# Declares the structure of our neural network
class Net(nn.Module):
    def __init__(self):
        # this is strictly necessary!
        super().__init__()

        self.layers = nn.Sequential(
            nn.Linear(90, 5000),
            nn.ELU(),
            nn.BatchNorm1d(5000),
            nn.Dropout(),

            nn.Linear(5000, 1000),
            nn.ELU(),
            nn.BatchNorm1d(1000),
            nn.Dropout(),

            nn.Linear(1000, 1),
        )

    def forward(self, x):
        x = self.layers(x)
        return x

net2 = Net() # Construct the neural network object

[17]:

if os.environ.get('COLAB_TPU_ADDR') is not None:
    device = xm.xla_device()
elif torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

net2.to(device)
train_inputv = train_inputv.cpu()
train_target = train_target.cpu()

criterion = nn.MSELoss()
optimizer = optim.Adam(net2.parameters(), lr=0.001)

print("Starting optimization.")
losses = []
net2.train()
for epoch in range(300):
    pytorch_dataset = TensorDataset(train_inputv, train_target)
    dataset_loader = DataLoader(
        pytorch_dataset, batch_size=100, shuffle=True,
        pin_memory=device.type=='cuda', drop_last=True
    )
    batch_losses = []
    for batch_inputv, batch_target in dataset_loader:
        batch_inputv = batch_inputv.to(device)
        batch_target = batch_target.to(device)

        optimizer.zero_grad()
        output = net2(batch_inputv)
        batch_loss = criterion(output, batch_target)
        batch_loss.backward()
        optimizer.step()
        batch_losses.append(batch_loss.item())

    loss = np.mean(batch_losses)
    losses.append(loss)
    if not (epoch+1) % 25:
        print('\r'+' '*1000, end='', flush=True)
        print('\rLoss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='', flush=True)

print("\nOptimization finished.")

# Evaluation on test set
net2.cpu()
net2.eval()
with torch.no_grad():
    predicted_values = net2(test_inputv)
    loss_on_test = criterion(predicted_values, test_target).item()

print(f"Loss on test dataset: {loss_on_test}")

Starting optimization.

Loss 4.24 in epoch 300

Optimization finished.

Loss on test dataset: 8.125945091247559
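Two of the ingredients used above are simple enough to write out by hand. The sketch below gives plain-Python versions of the ELU activation and of (inverted) dropout, assuming the default settings of nn.ELU (alpha=1.0) and nn.Dropout (p=0.5); the function names are illustrative and the code is not how PyTorch implements them internally.

```python
import math
import random

def elu(x, alpha=1.0):
    """ELU activation: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1)

def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and rescale the rest."""
    if not training:
        return list(values)          # evaluation mode: identity
    return [0.0 if rng.random() < p else v / (1 - p) for v in values]

activations = [elu(v) for v in (-2.0, 0.0, 3.0)]
train_out = dropout([1.0] * 10, p=0.5, rng=random.Random(0))
eval_out = dropout([1.0] * 10, training=False)
```

The difference between `train_out` and `eval_out` is the reason the calls to net2.train() and net2.eval() matter for this network: dropout is only active in training mode.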

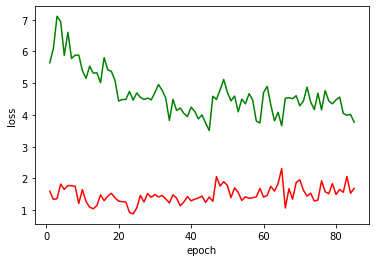

7.12. Early stopping

It’s even better now! So let’s create an early stopping system to choose the number of epochs at which to stop training.

[18]:

if os.environ.get('COLAB_TPU_ADDR') is not None:
    device = xm.xla_device()
elif torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

net2.to(device)
train_inputv = train_inputv.cpu()
train_target = train_target.cpu()

criterion = nn.MSELoss()
optimizer = optim.Adam(net2.parameters(), lr=0.001)

print("Starting optimization.")
train_losses = []
val_losses = []

# db split
val_idx = np.random.choice(len(train_inputv), len(train_inputv)//10, False)
train_idx = [x for x in range(len(train_inputv)) if x not in val_idx]

val_inputv = train_inputv[val_idx]
val_target = train_target[val_idx]
subtrain_inputv = train_inputv[train_idx]
subtrain_target = train_target[train_idx]

# initial values for early stopping decision state parameters
last_val_loss = np.inf
es_tries = 0

for epoch in range(100_000):
    # network training step
    net2.train()
    pytorch_dataset_train = TensorDataset(subtrain_inputv, subtrain_target)
    dataset_loader_train = DataLoader(
        pytorch_dataset_train, batch_size=100, shuffle=True,
        pin_memory=device.type=='cuda', drop_last=True
    )
    batch_losses = []
    for batch_inputv, batch_target in dataset_loader_train:
        batch_inputv = batch_inputv.to(device)
        batch_target = batch_target.to(device)

        optimizer.zero_grad()
        output = net2(batch_inputv)
        batch_loss = criterion(output, batch_target)
        batch_loss.backward()
        optimizer.step()
        batch_losses.append(batch_loss.item())

    loss = np.mean(batch_losses)
    train_losses.append(loss)
    if not (epoch+1) % 25:
        print('\r'+' '*1000, end='', flush=True)
        print('\rTrain loss', np.round(loss.item(), 2), end='')

    # network evaluation step
    net2.eval()
    with torch.no_grad():
        pytorch_dataset_val = TensorDataset(val_inputv, val_target)
        dataset_loader_val = DataLoader(
            pytorch_dataset_val, batch_size=100, shuffle=False,
            pin_memory=device.type=='cuda', drop_last=False,
        )
        batch_losses = []
        batch_sizes = []
        for batch_inputv, batch_target in dataset_loader_val:
            batch_inputv = batch_inputv.to(device)
            batch_target = batch_target.to(device)

            output = net2(batch_inputv)
            batch_loss = criterion(output, batch_target)
            batch_losses.append(batch_loss.item())
            batch_sizes.append(len(batch_inputv))

        loss = np.average(batch_losses, weights=batch_sizes)
        val_losses.append(loss)
        if not (epoch+1) % 25:
            print(' | Validation loss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='')

    # Decisions based on the evaluated values
    if loss < last_val_loss:
        best_state_dict = net2.state_dict()
        best_state_dict = pickle.dumps(best_state_dict)
        es_tries = 0
        last_val_loss = loss
    else:
        if es_tries in [20, 40]:
            net2.load_state_dict(pickle.loads(best_state_dict))

        if es_tries >= 60:
            net2.load_state_dict(pickle.loads(best_state_dict))
            break

        es_tries += 1

    if not (epoch+1) % 25:
        print(' | es_tries', es_tries, end='', flush=True)

print(f"\nOptimization finished in {epoch+1} epochs.")

# Evaluation on test set
net2.cpu()
net2.eval()
with torch.no_grad():
    predicted_values = net2(test_inputv)
    loss_on_test = criterion(predicted_values, test_target).item()

print(f"Loss on test dataset: {loss_on_test}")

Starting optimization.

Train loss 4.69 | Validation loss 1.32 in epoch 75 | es_tries 51

Optimization finished in 85 epochs.

Loss on test dataset: 8.442521095275879
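Stripped of the training code, the stopping rule itself is short. The function below is a simplified sketch of patience-based early stopping applied to a list of validation losses; the name `early_stopping_epoch` and the made-up loss history are purely illustrative, and unlike the loop above it neither reloads the best weights midway nor runs the one extra epoch that the loop needs before breaking.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), both 1-based.

    Training stops once `patience` consecutive epochs fail to improve
    on the best validation loss seen so far.
    """
    best_loss = float('inf')
    best_epoch = None
    tries = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, tries = loss, epoch, 0
        else:
            tries += 1
            if tries >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch

# Made-up validation losses, for illustration only
history = [5.0, 3.0, 2.5, 2.6, 2.4, 2.7, 2.8, 2.9, 3.0]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
```

Here training would stop at epoch 8, and the weights worth keeping are those from epoch 5, which is why the loop above stores a copy of the state dict every time the validation loss improves.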

[19]:

plt.plot(range(1, len(train_losses)+1), train_losses, color='green')

plt.plot(range(1, len(train_losses)+1), val_losses, color='red')

plt.xlabel('epoch')

plt.ylabel('loss')

[19]:

Text(0, 0.5, 'loss')

7.13. Classification

[20]:

# Declares the structure of our neural network
class Net(nn.Module):
    def __init__(self):
        # this is strictly necessary!
        super().__init__()

        self.layers = nn.Sequential(
            nn.Linear(90, 5000),
            nn.ELU(),
            nn.BatchNorm1d(5000),
            nn.Dropout(),

            nn.Linear(5000, 1000),
            nn.ELU(),
            nn.BatchNorm1d(1000),
            nn.Dropout(),

            nn.Linear(1000, 4),
        )

    def forward(self, x):
        x = self.layers(x)
        return x

net3 = Net() # Construct the neural network object

[21]:

if os.environ.get('COLAB_TPU_ADDR') is not None:
    device = xm.xla_device()
elif torch.cuda.is_available():
    device = torch.device('cuda')
else:
    device = torch.device('cpu')

# transform target into integer labels
train_target_label = train_target - torch.floor(train_target)
train_target_label = torch.round(train_target_label * 3)
train_target_label = torch.as_tensor(train_target_label, dtype=torch.long).flatten()

test_target_label = test_target - torch.floor(test_target)
test_target_label = torch.round(test_target_label * 3)
test_target_label = torch.as_tensor(test_target_label, dtype=torch.long).flatten()

net3.to(device)
train_inputv = train_inputv.cpu()
train_target_label = train_target_label.cpu()

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(net3.parameters(), lr=0.001)

print("Starting optimization.")
train_losses = []
val_losses = []

# db split
val_idx = np.random.choice(len(train_inputv), len(train_inputv)//10, False)
train_idx = [x for x in range(len(train_inputv)) if x not in val_idx]

val_inputv = train_inputv[val_idx]
val_target_label = train_target_label[val_idx]
subtrain_inputv = train_inputv[train_idx]
subtrain_target_label = train_target_label[train_idx]

# initial values for early stopping decision state parameters
last_val_loss = np.inf
es_tries = 0

for epoch in range(100_000):
    # network training step
    net3.train()
    pytorch_dataset_train = TensorDataset(subtrain_inputv, subtrain_target_label)
    dataset_loader_train = DataLoader(
        pytorch_dataset_train, batch_size=100, shuffle=True,
        pin_memory=device.type=='cuda', drop_last=True
    )
    batch_losses = []
    for batch_inputv, batch_target_label in dataset_loader_train:
        batch_inputv = batch_inputv.to(device)
        batch_target_label = batch_target_label.to(device)

        optimizer.zero_grad()
        output = net3(batch_inputv)
        batch_loss = criterion(output, batch_target_label)
        batch_loss.backward()
        optimizer.step()
        batch_losses.append(batch_loss.item())

    loss = np.mean(batch_losses)
    train_losses.append(loss)
    if not (epoch+1) % 25:
        print('\r'+' '*1000, end='', flush=True)
        print('\rTrain loss', np.round(loss.item(), 2), end='')

    # network evaluation step (no gradients are computed here)
    net3.eval()
    with torch.no_grad():
        pytorch_dataset_val = TensorDataset(val_inputv, val_target_label)
        dataset_loader_val = DataLoader(
            pytorch_dataset_val, batch_size=100, shuffle=False,
            pin_memory=device.type=='cuda', drop_last=False,
        )
        batch_losses = []
        batch_sizes = []
        for batch_inputv, batch_target_label in dataset_loader_val:
            batch_inputv = batch_inputv.to(device)
            batch_target_label = batch_target_label.to(device)

            output = net3(batch_inputv)
            batch_loss = criterion(output, batch_target_label)
            batch_losses.append(batch_loss.item())
            batch_sizes.append(len(batch_inputv))

        loss = np.average(batch_losses, weights=batch_sizes)
        val_losses.append(loss)
        if not (epoch+1) % 25:
            print(' | Validation loss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='')

    # Decisions based on the evaluated values
    if loss < last_val_loss:
        best_state_dict = net3.state_dict()
        best_state_dict = pickle.dumps(best_state_dict)
        es_tries = 0
        last_val_loss = loss
    else:
        if es_tries in [20, 40]:
            net3.load_state_dict(pickle.loads(best_state_dict))

        if es_tries >= 60:
            net3.load_state_dict(pickle.loads(best_state_dict))
            break

        es_tries += 1

    if not (epoch+1) % 25:
        print(' | es_tries', es_tries, end='', flush=True)

print(f"\nOptimization finished in {epoch+1} epochs.")

# Evaluation on test set
net3.cpu()
net3.eval()
with torch.no_grad():
    predicted_values = net3(test_inputv)
    loss_on_test = criterion(predicted_values, test_target_label).item()

print(f"Loss on test dataset: {loss_on_test}")

Starting optimization.

Train loss 0.21 | Validation loss 2.26 in epoch 100 | es_tries 52

Optimization finished in 109 epochs.

Loss on test dataset: 1.7111738920211792
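To interpret these numbers it helps to know what the cross-entropy loss computes. The function below is a plain-Python sketch for a single sample, taking raw network outputs (logits) and an integer label in the same way as the criterion used above; the name `cross_entropy` and the example logits are illustrative.

```python
import math

def cross_entropy(logits, label):
    """Cross-entropy of one sample: -log(softmax(logits)[label])."""
    shift = max(logits)   # subtracting the maximum improves numerical stability
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[label]

# A network that outputs equal scores for the 4 classes
uniform_loss = cross_entropy([0.0, 0.0, 0.0, 0.0], label=2)

# A confident, correct prediction and a confident, wrong one
confident_right = cross_entropy([0.0, 0.0, 5.0, 0.0], label=2)
confident_wrong = cross_entropy([0.0, 0.0, 5.0, 0.0], label=0)
```

The value for equal scores, log(4) ≈ 1.386, is a handy baseline: it is the loss of a classifier that ignores its input and spreads its probability evenly over the 4 classes.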

7.14. Classification with network chosen by Data splitting

[22]:

from sklearn.base import BaseEstimator
import hashlib

class CustomizableNet(nn.Module):
    def __init__(self, hsize1, hsize2, nfeatures):
        # this is strictly necessary!
        super().__init__()

        self.layers = nn.Sequential(
            self._initialize_layer(nn.Linear(nfeatures, hsize1)),
            nn.ELU(),
            nn.BatchNorm1d(hsize1),
            nn.Dropout(),

            self._initialize_layer(nn.Linear(hsize1, hsize2)),
            nn.ELU(),
            nn.BatchNorm1d(hsize2),
            nn.Dropout(),

            self._initialize_layer(nn.Linear(hsize2, 4)),
        )

    def forward(self, x):
        x = self.layers(x)
        return x

    def _initialize_layer(self, layer):
        nn.init.constant_(layer.bias, 0)
        gain = nn.init.calculate_gain('relu')
        nn.init.xavier_normal_(layer.weight, gain=gain)
        return layer

class NeuralNetEstimator(BaseEstimator):
    def __init__(self, hsize1 = 5000, hsize2=1000, batch_size = 100, lr=0.001,
                 weight_decay=0, cache_dir=None):
        self.hsize1 = hsize1
        self.hsize2 = hsize2
        self.batch_size = batch_size
        self.lr = lr
        self.weight_decay = weight_decay
        self.cache_dir = cache_dir

    def fit(self, x_train, y_train):
        train_inputv = torch.as_tensor(x_train, dtype=torch.float32)
        train_target = torch.as_tensor(y_train, dtype=torch.long)

        self.net = CustomizableNet(self.hsize1, self.hsize2, train_inputv.shape[1])

        if os.environ.get('COLAB_TPU_ADDR') is not None:
            device = xm.xla_device()
        elif torch.cuda.is_available():
            device = torch.device('cuda')
        else:
            device = torch.device('cpu')

        self.net.to(device)
        train_inputv = train_inputv.cpu()
        train_target = train_target.cpu()

        criterion = nn.CrossEntropyLoss()
        optimizer = optim.Adam(self.net.parameters(), lr=self.lr, weight_decay=self.weight_decay)

        print("Starting optimization.")
        self.train_losses = []
        self.val_losses = []

        # db split
        train_idx = torch.randperm(len(train_inputv))
        val_idx = train_idx[:len(train_inputv)//10]
        train_idx = train_idx[len(train_inputv)//10:]

        val_inputv = train_inputv[val_idx]
        val_target = train_target[val_idx]
        subtrain_inputv = train_inputv[train_idx]
        subtrain_target = train_target[train_idx]

        # initial values for early stopping decision state parameters
        last_val_loss = np.inf
        es_tries = 0

        for epoch in range(100_000):
            try:
                # network training step
                self.net.train()
                pytorch_dataset_train = TensorDataset(subtrain_inputv, subtrain_target)
                dataset_loader_train = DataLoader(
                    pytorch_dataset_train, batch_size=self.batch_size, shuffle=True,
                    pin_memory=device.type=='cuda', drop_last=True
                )
                batch_losses = []
                for batch_inputv, batch_target in dataset_loader_train:
                    batch_inputv = batch_inputv.to(device)
                    batch_target = batch_target.to(device)

                    optimizer.zero_grad()
                    output = self.net(batch_inputv)
                    batch_loss = criterion(output, batch_target)
                    batch_loss.backward()
                    optimizer.step()
                    batch_losses.append(batch_loss.item())

                loss = np.mean(batch_losses)
                self.train_losses.append(loss)
                if not (epoch+1) % 5:
                    print('\r'+' '*1000, end='', flush=True)
                    print('\rTrain loss', np.round(loss.item(), 2), end='')

                # network evaluation step (no gradients are computed here)
                self.net.eval()
                with torch.no_grad():
                    pytorch_dataset_val = TensorDataset(val_inputv, val_target)
                    dataset_loader_val = DataLoader(
                        pytorch_dataset_val, batch_size=self.batch_size, shuffle=False,
                        pin_memory=device.type=='cuda', drop_last=False,
                    )
                    batch_losses = []
                    batch_sizes = []
                    for batch_inputv, batch_target in dataset_loader_val:
                        batch_inputv = batch_inputv.to(device)
                        batch_target = batch_target.to(device)

                        output = self.net(batch_inputv)
                        batch_loss = criterion(output, batch_target)
                        batch_losses.append(batch_loss.item())
                        batch_sizes.append(len(batch_inputv))

                    loss = np.average(batch_losses, weights=batch_sizes)
                    self.val_losses.append(loss)
                    if not (epoch+1) % 5:
                        print(' | Validation loss', np.round(loss.item(), 2), 'in epoch', epoch + 1, end='')

                # Decisions based on the evaluated values
                if loss < last_val_loss:
                    best_state_dict = self.net.state_dict()
                    best_state_dict = pickle.dumps(best_state_dict)
                    es_tries = 0
                    last_val_loss = loss
                else:
                    if es_tries >= 10:
                        self.net.load_state_dict(pickle.loads(best_state_dict))
                        break

                    es_tries += 1

                if not (epoch+1) % 5:
                    print(' | es_tries', es_tries, end='', flush=True)

            except KeyboardInterrupt:
                if epoch > 0:
                    print("\nKeyboard interrupt detected.",
                          "Switching weights to lowest validation loss",
                          "and exiting")
                    self.net.load_state_dict(pickle.loads(best_state_dict))
                break

        print(f"\nOptimization finished in {epoch+1} epochs.")
        return self

    #def predict_proba(self, x_pred):

    #def predict(self, x_pred):

    def score(self, x_score, y_score):
        return - self.get_loss(x_score, y_score)[0]

    def get_loss(self, x_score, y_score):
        criterion = nn.CrossEntropyLoss()

        if os.environ.get('COLAB_TPU_ADDR') is not None:
            device = xm.xla_device()
        elif torch.cuda.is_available():
            device = torch.device('cuda')
        else:
            device = torch.device('cpu')

        score_inputv = torch.as_tensor(x_score, dtype=torch.float32)
        score_target = torch.as_tensor(y_score, dtype=torch.long)
        dataset = TensorDataset(score_inputv, score_target)

        self.net.eval()
        with torch.no_grad():
            dataset_loader = DataLoader(
                dataset, batch_size=100, shuffle=False,
                pin_memory=device.type=='cuda', drop_last=False,
            )

            cv_batch_losses = []
            zo_batch_losses = []
            batch_sizes = []
            # iterate over the loader built from x_score and y_score
            for batch_inputv, batch_target in dataset_loader:
                batch_inputv = batch_inputv.to(device)
                batch_target = batch_target.to(device)

                output = self.net(batch_inputv)
                cv_batch_loss = criterion(output, batch_target)
                zo_batch_loss = (torch.argmax(output, 1) != batch_target).cpu()
                zo_batch_loss = np.array(zo_batch_loss).mean()

                cv_batch_losses.append(cv_batch_loss.item())
                zo_batch_losses.append(zo_batch_loss.item())
                batch_sizes.append(len(batch_inputv))

            cv_loss = np.average(cv_batch_losses, weights=batch_sizes)
            zo_loss = np.average(zo_batch_losses, weights=batch_sizes)

        return cv_loss, zo_loss

nn_estimator = NeuralNetEstimator(hsize1 = 5000, hsize2=1000)
nn_estimator.fit(train_inputv, train_target_label)
loss_on_test = nn_estimator.get_loss(test_inputv, test_target_label)
print(f"Loss on test dataset for default parameters: {loss_on_test}")

Starting optimization.

Train loss 0.69 | Validation loss 3.05 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Loss on test dataset for default parameters: (1.5783445835113525, 0.5428571428571428)

[23]:

from sklearn.pipeline import Pipeline
import tempfile
import os
import time

# directory where the fitted pipelines are cached
tempdir = tempfile.mkdtemp()

class CachedPipeline(Pipeline):
    def fit(self, x,y):
        super().fit(x,y)
        filename = self.get_filepath(self.get_params())
        with open(filename, 'wb') as file:
            pickle.dump(self, file)
        return self

    def get_filepath(self, dict_):
        # sha256 is used because ripemd160 is not available in every OpenSSL build
        h = hashlib.sha256()
        dict_ = {k:v for k,v in dict_.items() if '__' in k}
        h.update(pickle.dumps(sorted(list(dict_.items()))))
        filename = h.hexdigest()
        return os.path.join(tempdir, filename)

    def reconstruct(self, params):
        all_params = self.get_params().copy()
        for k, v in params.items():
            all_params[k] = v
        filename = self.get_filepath(all_params)
        with open(filename, 'rb') as file:
            return pickle.load(file)
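The caching trick above rests on one idea: the same set of parameters must always map to the same file name. The sketch below isolates that idea with a hypothetical helper, `params_to_filename`; as assumptions of this illustration, it serializes with JSON rather than pickle and hashes with SHA-256.

```python
import hashlib
import json

def params_to_filename(params):
    """Map a parameter dictionary to a deterministic file name."""
    # Sorting the keys makes the result independent of insertion order
    encoded = json.dumps(params, sort_keys=True).encode('utf-8')
    return hashlib.sha256(encoded).hexdigest()

name_a = params_to_filename({'nn__lr': 0.001, 'nn__hsize1': 500})
name_b = params_to_filename({'nn__hsize1': 500, 'nn__lr': 0.001})
name_c = params_to_filename({'nn__hsize1': 600, 'nn__lr': 0.001})
```

Because `name_a` and `name_b` coincide, a fitted model saved under that name can be found again later from the parameters alone, which is what the reconstruct method relies on.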

[24]:

from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, ShuffleSplit
from sklearn.preprocessing import StandardScaler
import time

pl_estimator = CachedPipeline([
    ('scaler', StandardScaler()),
    ('nn', NeuralNetEstimator()),
])

gs_params = {
    'nn__hsize1': [500, 600, 1000, 2000, 3000, 5000, 7000, 8000, 10000],
    'nn__hsize2': [100, 500, 750, 1000, 2000, 4000, 6000],
    'nn__lr': np.logspace(-0.1, -5),
    'nn__weight_decay': np.logspace(-10, 1)-1e-10,
    'nn__batch_size': [100, 200],
}

cv = ShuffleSplit(n_splits=1, test_size=0.1, random_state=0)
gs_estimator = RandomizedSearchCV(pl_estimator, gs_params, cv=cv, refit=False, n_iter=300)

start_time = time.time()
gs_estimator.fit(train_inputv, train_target_label)
end_time = time.time()
print(f"Fit process time: {end_time-start_time}")
print(f"Best parameters: {gs_estimator.best_params_}")

# reconstruct best estimator from disk cache
best_nn_estimator = gs_estimator.estimator.reconstruct(gs_estimator.best_params_)

loss_on_test = best_nn_estimator.steps[-1][1].get_loss(
    best_nn_estimator.steps[0][1].transform(test_inputv), test_target_label
)
print(f"Loss on test dataset for parameters found using Data splitting: {loss_on_test}")
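A quick count shows why a randomized search is used here rather than an exhaustive grid. The arithmetic below uses the sizes of the lists in the search space above, together with the fact that np.logspace returns 50 points when num is not given; the variable names are illustrative.

```python
import math

# Number of candidate values per hyperparameter in the search space above
n_values = {
    'nn__hsize1': 9,
    'nn__hsize2': 7,
    'nn__lr': 50,            # np.logspace default number of points
    'nn__weight_decay': 50,  # np.logspace default number of points
    'nn__batch_size': 2,
}

grid_size = math.prod(n_values.values())
n_iter = 300
fraction_explored = n_iter / grid_size
```

The full grid has 315,000 combinations, so the 300 sampled configurations cover less than 0.1% of it; each one still requires training a network from scratch.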

Starting optimization.

Train loss 2.95 | Validation loss 1.76 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.13 | Validation loss 2.71 in epoch 35 | es_tries 10

Optimization finished in 36 epochs.

Starting optimization.

Train loss 0.25 | Validation loss 3.05 in epoch 30 | es_tries 7

Optimization finished in 34 epochs.

Starting optimization.

Train loss 0.31 | Validation loss 2.21 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 0.36 | Validation loss 2.32 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 2.96 | Validation loss 1.8 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.42 | Validation loss 2.77 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 1.39 | Validation loss 30.65 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 3.2 | Validation loss 2.0 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 3.0 | Validation loss 1.62 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.41 | Validation loss 4.37 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 2.96 | Validation loss 1.68 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 1.38 | Validation loss 1.38 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 0.82 | Validation loss 2.47 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.75 | Validation loss 1.54 in epoch 45 | es_tries 7

Optimization finished in 49 epochs.

Starting optimization.

Train loss 2.81 | Validation loss 1.47 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 2.76 | Validation loss 1.88 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 12.28 | Validation loss 89.6 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 0.14 | Validation loss 2.94 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 1.61 | Validation loss 2.07 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 3.06 | Validation loss 1.75 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.66 | Validation loss 1.88 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.26 | Validation loss 2.04 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 3.3 | Validation loss 35.03 in epoch 30 | es_tries 0

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.13 | Validation loss 2.82 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.1 | Validation loss 1.77 in epoch 35 | es_tries 9

Optimization finished in 37 epochs.

Starting optimization.

Train loss 0.84 | Validation loss 5.67 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 0.33 | Validation loss 6.02 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 0.24 | Validation loss 3.08 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 8.72 | Validation loss 17.89 in epoch 45 | es_tries 7

Optimization finished in 49 epochs.

Starting optimization.

Train loss 2.23 | Validation loss 2.16 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.34 | Validation loss 1.37 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 2.29 | Validation loss 1.73 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.6 | Validation loss 1.81 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 0.73 | Validation loss 1.94 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 0.23 | Validation loss 2.4 in epoch 30 | es_tries 10

Optimization finished in 31 epochs.

Starting optimization.

Train loss 0.68 | Validation loss 3.82 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.52 | Validation loss 3.14 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.35 | Validation loss 6.04 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 1.38 | Validation loss 1.37 in epoch 55 | es_tries 9

Optimization finished in 57 epochs.

Starting optimization.

Train loss 1.28 | Validation loss 2.57 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.18 | Validation loss 1.36 in epoch 30 | es_tries 8

Optimization finished in 33 epochs.

Starting optimization.

Train loss 2.14 | Validation loss 3.35 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.63 | Validation loss 2.42 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 154.6 | Validation loss 1271.43 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 0.65 | Validation loss 1.78 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 3.04 | Validation loss 1.71 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 1.3 | Validation loss 2.63 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.26 | Validation loss 2.97 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.32 | Validation loss 1.38 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 2.98 | Validation loss 1.77 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.09 | Validation loss 1.56 in epoch 90 | es_tries 8

Optimization finished in 93 epochs.

Starting optimization.

Train loss 1.97 | Validation loss 2.07 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.4 | Validation loss 1.83 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.45 | Validation loss 2.08 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.99 | Validation loss 2.29 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 9.46 | Validation loss 177.9 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 0.99 | Validation loss 5.81 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 0.21 | Validation loss 3.73 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 2.37 | Validation loss 1.75 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.28 | Validation loss 2.22 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.61 | Validation loss 1.83 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 2.27 | Validation loss 1.94 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 134.55 | Validation loss 707.65 in epoch 60 | es_tries 10

Optimization finished in 61 epochs.

Starting optimization.

Train loss 0.5 | Validation loss 8.54 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 0.38 | Validation loss 3.37 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 0.37 | Validation loss 10.55 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 0.67 | Validation loss 1.88 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 1.22 | Validation loss 1.38 in epoch 25 | es_tries 0

Optimization finished in 25 epochs.

Starting optimization.

Train loss 1.15 | Validation loss 6.96 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 3.03 | Validation loss 1.73 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.75 | Validation loss 2.41 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.85 | Validation loss 41.57 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 0.7 | Validation loss 6.71 in epoch 40

Optimization finished in 40 epochs.

Starting optimization.

Train loss 1.18 | Validation loss 1.38 in epoch 60

Optimization finished in 60 epochs.

Starting optimization.

Train loss 2.0 | Validation loss 4.84 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.66 | Validation loss 1.98 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.86 | Validation loss 6.34 in epoch 35 | es_tries 10

Optimization finished in 36 epochs.

Starting optimization.

Train loss 0.12 | Validation loss 4.37 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 0.19 | Validation loss 4.04 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 1.25 | Validation loss 1.4 in epoch 40 | es_tries 7

Optimization finished in 44 epochs.

Starting optimization.

Train loss 0.3 | Validation loss 4.7 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.16 | Validation loss 1.54 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 1.33 | Validation loss 1.32 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.16 | Validation loss 13.75 in epoch 40 | es_tries 9

Optimization finished in 42 epochs.

Starting optimization.

Train loss 1.85 | Validation loss 4.09 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.13 | Validation loss 2.98 in epoch 35 | es_tries 9

Optimization finished in 37 epochs.

Starting optimization.

Train loss 2.23 | Validation loss 39.14 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 0.5 | Validation loss 2.05 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 2.3 | Validation loss 1.64 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.8 | Validation loss 1.97 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.56 | Validation loss 2.09 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 1.3 | Validation loss 29.15 in epoch 25 | es_tries 9

Optimization finished in 27 epochs.

Starting optimization.

Train loss 1.86 | Validation loss 2.62 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.13 | Validation loss 4.06 in epoch 30 | es_tries 10

Optimization finished in 31 epochs.

Starting optimization.

Train loss 25.97 | Validation loss 324.45 in epoch 25 | es_tries 8

Optimization finished in 28 epochs.

Starting optimization.

Train loss 0.93 | Validation loss 3.3 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.61 | Validation loss 3.43 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.01 | Validation loss 1.77 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.73 | Validation loss 2.66 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 3.26 | Validation loss 2.09 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.32 | Validation loss 1.84 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.28 | Validation loss 2.69 in epoch 25 | es_tries 9

Optimization finished in 27 epochs.

Starting optimization.

Train loss 2.14 | Validation loss 1.69 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 1.73 | Validation loss 2.97 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.44 | Validation loss 2.81 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 2.11 | Validation loss 3.57 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.31 | Validation loss 1.5 in epoch 40 | es_tries 10

Optimization finished in 41 epochs.

Starting optimization.

Train loss 1.82 | Validation loss 2.88 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.14 | Validation loss 3.28 in epoch 35

Optimization finished in 35 epochs.

Starting optimization.

Train loss 1.75 | Validation loss 37.85 in epoch 35

Optimization finished in 35 epochs.

Starting optimization.

Train loss 5.74 | Validation loss 7.63 in epoch 35

Optimization finished in 35 epochs.

Starting optimization.

Train loss 2.68 | Validation loss 1.82 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.33 | Validation loss 3.45 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.74 | Validation loss 20.01 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 1.76 | Validation loss 2.18 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 3.11 | Validation loss 2.06 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.42 | Validation loss 1.68 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 2.26 | Validation loss 1.6 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.19 | Validation loss 1.85 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 1.29 | Validation loss 1.33 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 0.57 | Validation loss 2.2 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 2.26 | Validation loss 2.4 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 42.78 | Validation loss 170.76 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 1.96 | Validation loss 1.64 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 21.74 | Validation loss 138.23 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 2.26 | Validation loss 1.8 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.14 | Validation loss 3.21 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 2.95 | Validation loss 1.76 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.05 | Validation loss 2.32 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.91 | Validation loss 2.96 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 1.15 | Validation loss 1.47 in epoch 80 | es_tries 10

Optimization finished in 81 epochs.

Starting optimization.

Train loss 2.74 | Validation loss 1.83 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.35 | Validation loss 1.35 in epoch 25 | es_tries 0

Optimization finished in 25 epochs.

Starting optimization.

Train loss 0.28 | Validation loss 3.29 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 2.96 | Validation loss 1.89 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.14 | Validation loss 2.48 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.34 | Validation loss 2.2 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.9 | Validation loss 2.48 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.63 | Validation loss 1.66 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 2.43 | Validation loss 1.48 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 15.44 | Validation loss 16.36 in epoch 40 | es_tries 9

Optimization finished in 42 epochs.

Starting optimization.

Train loss 0.89 | Validation loss 1.66 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 0.64 | Validation loss 2.31 in epoch 35 | es_tries 7

Optimization finished in 39 epochs.

Starting optimization.

Train loss 3.05 | Validation loss 1.79 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.21 | Validation loss 2.5 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.44 | Validation loss 2.46 in epoch 25 | es_tries 9

Optimization finished in 27 epochs.

Starting optimization.

Train loss 1.89 | Validation loss 2.15 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.52 | Validation loss 1.56 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 2.52 | Validation loss 58.04 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 2.35 | Validation loss 5.39 in epoch 55 | es_tries 10

Optimization finished in 56 epochs.

Starting optimization.

Train loss 2.11 | Validation loss 1.66 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 0.23 | Validation loss 4.73 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 0.36 | Validation loss 4.0 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 0.17 | Validation loss 3.86 in epoch 35

Optimization finished in 35 epochs.

Starting optimization.

Train loss 1.45 | Validation loss 1.44 in epoch 45 | es_tries 7

Optimization finished in 49 epochs.

Starting optimization.

Train loss 131.98 | Validation loss 811.72 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 2.13 | Validation loss 1.55 in epoch 50 | es_tries 8

Optimization finished in 53 epochs.

Starting optimization.

Train loss 0.25 | Validation loss 2.63 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 2.01 | Validation loss 2.62 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.65 | Validation loss 2.75 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.63 | Validation loss 1.72 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.26 | Validation loss 2.5 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 1.01 | Validation loss 12.78 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 1.38 | Validation loss 1.61 in epoch 50

Optimization finished in 50 epochs.

Starting optimization.

Train loss 0.36 | Validation loss 3.41 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 2.24 | Validation loss 1.85 in epoch 25 | es_tries 7

Optimization finished in 29 epochs.

Starting optimization.

Train loss 0.65 | Validation loss 2.29 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 0.8 | Validation loss 2.47 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 1.33 | Validation loss 1.31 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 281.12 | Validation loss 1941.94 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 0.93 | Validation loss 7.25 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 2.84 | Validation loss 2.08 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.67 | Validation loss 2.77 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.37 | Validation loss 1.36 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 1.45 | Validation loss 5.1 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 0.5 | Validation loss 1.97 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 1.7 | Validation loss 3.49 in epoch 75 | es_tries 10

Optimization finished in 76 epochs.

Starting optimization.

Train loss 2.13 | Validation loss 2.38 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.19 | Validation loss 4.58 in epoch 25 | es_tries 9

Optimization finished in 27 epochs.

Starting optimization.

Train loss 0.29 | Validation loss 2.78 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.34 | Validation loss 1.32 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 3.15 | Validation loss 1.73 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.01 | Validation loss 1.9 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 2.29 | Validation loss 2.15 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.73 | Validation loss 2.63 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 1.28 | Validation loss 1.27 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 5.33 | Validation loss 7.66 in epoch 50 | es_tries 8

Optimization finished in 53 epochs.

Starting optimization.

Train loss 3.05 | Validation loss 2.04 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.9 | Validation loss 1.86 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.85 | Validation loss 2.91 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.42 | Validation loss 2.67 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.21 | Validation loss 1.7 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 0.79 | Validation loss 1.78 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 2.27 | Validation loss 1.99 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.72 | Validation loss 1.93 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.05 | Validation loss 2.14 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.36 | Validation loss 1.36 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 1.38 | Validation loss 3.12 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.04 | Validation loss 1.55 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 1.91 | Validation loss 1.91 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.36 | Validation loss 1.76 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.03 | Validation loss 13.57 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 2.05 | Validation loss 2.33 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 2.3 | Validation loss 1.94 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.87 | Validation loss 7.8 in epoch 30 | es_tries 10

Optimization finished in 31 epochs.

Starting optimization.

Train loss 2.43 | Validation loss 1.73 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 5.13 | Validation loss 26.71 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 0.42 | Validation loss 3.68 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 0.27 | Validation loss 2.84 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.47 | Validation loss 2.24 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.33 | Validation loss 1.31 in epoch 35 | es_tries 7

Optimization finished in 39 epochs.

Starting optimization.

Train loss 3.06 | Validation loss 2.03 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.36 | Validation loss 3.17 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 0.65 | Validation loss 1.95 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 1.11 | Validation loss 2.27 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.84 | Validation loss 1.81 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.07 | Validation loss 1.49 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 0.63 | Validation loss 3.78 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 1.68 | Validation loss 1.38 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.41 | Validation loss 1.39 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.82 | Validation loss 4.11 in epoch 35 | es_tries 10

Optimization finished in 36 epochs.

Starting optimization.

Train loss 0.81 | Validation loss 17.45 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 2.45 | Validation loss 1.61 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 0.94 | Validation loss 1.7 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.84 | Validation loss 1.46 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 6.22 | Validation loss 61.77 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 6.08 | Validation loss 33.06 in epoch 30

Optimization finished in 30 epochs.

Starting optimization.

Train loss 1.97 | Validation loss 3.22 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 19.43 | Validation loss 226.66 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.32 | Validation loss 2.44 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.77 | Validation loss 1.92 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.17 | Validation loss 2.22 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.72 | Validation loss 2.23 in epoch 60 | es_tries 10

Optimization finished in 61 epochs.

Starting optimization.

Train loss 251.84 | Validation loss 421.51 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 1.0 | Validation loss 1.75 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 1.65 | Validation loss 3.5 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.0 | Validation loss 2.55 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.72 | Validation loss 3.08 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 6.69 | Validation loss 92.75 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 0.31 | Validation loss 3.33 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 1.24 | Validation loss 2.94 in epoch 10 | es_tries 7

Optimization finished in 14 epochs.

Starting optimization.

Train loss 2.07 | Validation loss 2.09 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.52 | Validation loss 2.43 in epoch 25 | es_tries 10

Optimization finished in 26 epochs.

Starting optimization.

Train loss 2.06 | Validation loss 1.95 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.72 | Validation loss 6.0 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 2.13 | Validation loss 2.3 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.35 | Validation loss 2.82 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 131.53 | Validation loss 497.76 in epoch 15 | es_tries 7

Optimization finished in 19 epochs.

Starting optimization.

Train loss 2.61 | Validation loss 1.76 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.31 | Validation loss 1.94 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 3.25 | Validation loss 1.75 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 1.87 | Validation loss 17.84 in epoch 25 | es_tries 8

Optimization finished in 28 epochs.

Starting optimization.

Train loss 1.12 | Validation loss 1.4 in epoch 25 | es_tries 8

Optimization finished in 28 epochs.

Starting optimization.

Train loss 0.72 | Validation loss 2.2 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 5.89 | Validation loss 5.79 in epoch 60 | es_tries 10

Optimization finished in 61 epochs.

Starting optimization.

Train loss 1.8 | Validation loss 3.46 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.3 | Validation loss 1.54 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 0.18 | Validation loss 4.03 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 1.58 | Validation loss 1.64 in epoch 35 | es_tries 10

Optimization finished in 36 epochs.

Starting optimization.

Train loss 1.27 | Validation loss 2.74 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.86 | Validation loss 2.29 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.91 | Validation loss 2.6 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.79 | Validation loss 5.68 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.62 | Validation loss 2.69 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 0.28 | Validation loss 2.84 in epoch 15

Optimization finished in 15 epochs.

Starting optimization.

Train loss 1.53 | Validation loss 1.67 in epoch 40 | es_tries 8

Optimization finished in 43 epochs.

Starting optimization.

Train loss 2.65 | Validation loss 1.8 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.39 | Validation loss 1.38 in epoch 30 | es_tries 10

Optimization finished in 31 epochs.

Starting optimization.

Train loss 2.0 | Validation loss 2.18 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.47 | Validation loss 3.32 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.44 | Validation loss 1.46 in epoch 30 | es_tries 7

Optimization finished in 34 epochs.

Starting optimization.

Train loss 1.73 | Validation loss 3.79 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.14 | Validation loss 3.64 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.5 | Validation loss 2.19 in epoch 25 | es_tries 7

Optimization finished in 29 epochs.

Starting optimization.

Train loss 3.23 | Validation loss 1.59 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.42 | Validation loss 2.68 in epoch 20 | es_tries 7

Optimization finished in 24 epochs.

Starting optimization.

Train loss 1.29 | Validation loss 1.42 in epoch 20 | es_tries 10

Optimization finished in 21 epochs.

Starting optimization.

Train loss 1.71 | Validation loss 3.01 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.32 | Validation loss 1.34 in epoch 15 | es_tries 10

Optimization finished in 16 epochs.

Starting optimization.

Train loss 19.81 | Validation loss 838.07 in epoch 20 | es_tries 9

Optimization finished in 22 epochs.

Starting optimization.

Train loss 2.12 | Validation loss 44.61 in epoch 30 | es_tries 9

Optimization finished in 32 epochs.

Starting optimization.

Train loss 0.72 | Validation loss 2.23 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 13.7 | Validation loss 202.22 in epoch 20 | es_tries 8

Optimization finished in 23 epochs.

Starting optimization.

Train loss 1.14 | Validation loss 2.12 in epoch 45 | es_tries 7

Optimization finished in 49 epochs.

Starting optimization.

Train loss 2.57 | Validation loss 1.87 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.55 | Validation loss 21.46 in epoch 25 | es_tries 7

Optimization finished in 29 epochs.

Starting optimization.

Train loss 0.32 | Validation loss 9.1 in epoch 25 | es_tries 8

Optimization finished in 28 epochs.

Starting optimization.

Train loss 0.54 | Validation loss 2.41 in epoch 35 | es_tries 9

Optimization finished in 37 epochs.

Starting optimization.

Train loss 0.78 | Validation loss 1.7 in epoch 25 | es_tries 9

Optimization finished in 27 epochs.

Starting optimization.

Train loss 2.09 | Validation loss 2.07 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 2.22 | Validation loss 2.16 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Starting optimization.

Train loss 0.31 | Validation loss 2.93 in epoch 70

Optimization finished in 70 epochs.

Starting optimization.

Train loss 112.98 | Validation loss 1076.71 in epoch 15 | es_tries 8

Optimization finished in 18 epochs.

Starting optimization.

Train loss 1.04 | Validation loss 11.22 in epoch 15 | es_tries 9

Optimization finished in 17 epochs.

Starting optimization.

Train loss 3.19 | Validation loss 1.94 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 1.92 | Validation loss 2.51 in epoch 10 | es_tries 9

Optimization finished in 12 epochs.

Starting optimization.

Train loss 0.76 | Validation loss 2.37 in epoch 20

Optimization finished in 20 epochs.

Starting optimization.

Train loss 0.11 | Validation loss 5.14 in epoch 25

Optimization finished in 25 epochs.

Starting optimization.

Train loss 2.74 | Validation loss 1.68 in epoch 10 | es_tries 8

Optimization finished in 13 epochs.

Fit process time: 557.2798354625702

Best parameters: {'nn__weight_decay': 1.4563384775012444e-05, 'nn__lr': 0.001995262314968879, 'nn__hsize2': 750, 'nn__hsize1': 5000, 'nn__batch_size': 100}

Loss on test dataset for parameters found using Data splitting: (0.3876900374889374, 0.08571428571428572)
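
The keys of the best-parameters dictionary reported above carry an "nn__" prefix. This looks like the double-underscore convention that scikit-learn uses to address the parameters of a named pipeline step, although that is an assumption here, as is the step name "nn". The sketch below only illustrates how such keys could be mapped back to plain keyword arguments; the helper name strip_step_prefix is hypothetical and is not part of any library.

```python
# Best parameters copied verbatim from the output above.
best_params = {
    "nn__weight_decay": 1.4563384775012444e-05,
    "nn__lr": 0.001995262314968879,
    "nn__hsize2": 750,
    "nn__hsize1": 5000,
    "nn__batch_size": 100,
}


def strip_step_prefix(params, step="nn"):
    """Hypothetical helper: drop the '<step>__' prefix from each key.

    Keys that do not belong to the given step are ignored.
    """
    prefix = step + "__"
    return {
        key[len(prefix):]: value
        for key, value in params.items()
        if key.startswith(prefix)
    }


# Plain keyword arguments for the step assumed to be called "nn".
nn_kwargs = strip_step_prefix(best_params)
print(nn_kwargs)
```

With the prefix removed, the values read directly as the selected learning rate, weight decay, hidden layer sizes and batch size, which makes it easier to refit a single model with the chosen configuration.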